Parallel Computing

Homework Help & Tutoring

We offer an array of different online Parallel Computing tutors, all of whom are advanced in their fields and highly qualified to instruct you.

Parallel Computing

As societies become increasingly digital, computers are involved in nearly all business operations. As a result, more data is stored and processed, and applications must operate on very large data structures. Businesses and organizations increasingly base their decisions on the processing of enormous databases, often with hundreds of millions of records.

Although modern computers offer good processing speed and large amounts of RAM at low cost, a single machine can still need a very long time to solve problems of this size. A widely used solution is to let several processors or machines work on the problem at the same time, which is known as parallelism. Some basic knowledge of parallel programming should be a requirement for any contemporary IT professional, and you will almost certainly face related tasks in any Computer Science degree program.

The case for parallelism

Moore’s law was formulated as early as 1965 by Gordon Moore, then at Fairchild Semiconductor and later a co-founder of Intel. In its commonly quoted form, it states that the number of transistors on an integrated circuit, and with it the computing power available on a single machine, doubles roughly every two years[1]. Most experts were skeptical about his statement, but it held remarkably well through the IT boom of the following 50 years[2]. This evolution was instrumental in bringing PCs into people's lives and homes. In recent years, however, the rate has slowed, and many industry watchers now declare the law dead[3]. Computing power will naturally continue to grow, but likely not at the same pace. On top of this, the amount of data used in modern organizations keeps growing. More complex operations, and more data being stored and rendered at higher resolutions, all lead to programs that consume more time and memory. One way to make them faster is to utilize several CPUs.

Parallel programming realms

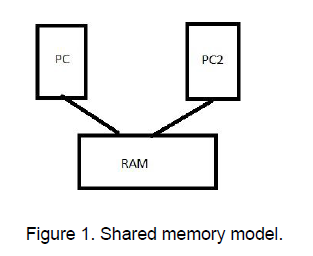

One usually makes a distinction between shared memory and distributed memory applications. In shared memory, all processors read from and write to the same RAM; see Figure 1:

Figure 1. Shared memory model.

The shared memory model requires less communication than the distributed memory model, but because several processors may try to overwrite or work on the same records at the same time, a protocol for avoiding simultaneous accesses must be implemented. This is usually done with a locking procedure. One of the most common packages for shared memory is OpenMP[4]. Alternatively, one can use the threading facilities of a programming language directly and let several cores of a modern multicore machine work on a problem; in Java, for example, this is done with threads[5]. Shared resources are protected with locks: a thread acquires a lock before it touches the shared data, and any other thread that needs the same lock must wait until it is released[6].
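The locking idea can be illustrated with a minimal sketch. It uses Python's standard `threading` module rather than OpenMP or Java, and the function name `count_with_lock` and its parameters are hypothetical names chosen for this example. Note that in the standard CPython interpreter threads usually do not execute Python code simultaneously, so the sketch shows the locking discipline, not a speedup.

```python
import threading


def count_with_lock(num_threads: int, increments_per_thread: int) -> int:
    """Increment one shared counter from several threads, guarded by a lock."""
    counter = 0
    lock = threading.Lock()

    def worker() -> None:
        nonlocal counter
        for _ in range(increments_per_thread):
            # Only one thread at a time may perform this read-modify-write.
            with lock:
                counter += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait until every worker has finished
    return counter


if __name__ == "__main__":
    # 4 threads x 10,000 increments each
    print(count_with_lock(num_threads=4, increments_per_thread=10_000))  # prints 40000
```

Without the `with lock:` line, two threads could read the same old value of `counter` and one of the increments could be lost, which is exactly the kind of simultaneous access the lock is meant to prevent.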

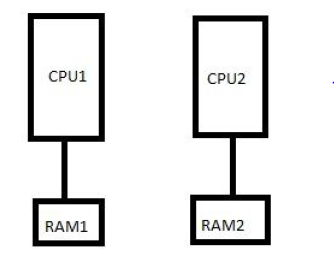

The other situation occurs when each CPU has its own memory space, which is called the distributed memory model. See Figure 2:

Figure 2. Distributed memory.

With the distributed memory model, the problem of concurrent accesses is no longer present, but the processors must exchange data and synchronise their results explicitly, so there is added overhead in the form of communication between the processors. The most prevalent standard for distributed memory programming goes by the name of MPI (Message Passing Interface).
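The message-passing style can be sketched as follows. This is not MPI code: as a stand-in that runs with the Python standard library alone, it uses threads and `queue.Queue` objects inside one process, and each worker deliberately touches only the data it receives as a message. The names `worker` and `message_passing_sum` are hypothetical; a real distributed program would run the workers on separate processors and use an MPI implementation for the sends and receives.

```python
import queue
import threading


def worker(inbox: queue.Queue, outbox: queue.Queue) -> None:
    """Receive one chunk of data as a message and send back its partial sum."""
    chunk = inbox.get()       # receive: the worker sees only what it is sent
    outbox.put(sum(chunk))    # send: the result travels back as a message


def message_passing_sum(data: list, num_workers: int) -> int:
    """Scatter `data` over the workers, then gather and add the partial sums."""
    results: queue.Queue = queue.Queue()
    inboxes = [queue.Queue() for _ in range(num_workers)]
    workers = [
        threading.Thread(target=worker, args=(inbox, results))
        for inbox in inboxes
    ]
    for w in workers:
        w.start()

    # Scatter: worker i receives every num_workers-th element, starting at i.
    for i, inbox in enumerate(inboxes):
        inbox.put(data[i::num_workers])

    # Gather: wait for one partial sum per worker and combine them.
    total = sum(results.get() for _ in range(num_workers))

    for w in workers:
        w.join()
    return total


if __name__ == "__main__":
    print(message_passing_sum(list(range(1, 101)), num_workers=4))  # prints 5050
```

No lock is needed because no data is shared; the price is the scatter and gather steps, which correspond to the communication overhead described above.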

Benchmarks

The common success criterion is called speedup, defined as how much faster the program runs in parallel: the ratio of the processing time on a single processor to the processing time when using multiple CPUs[7]. In an ideal world, one would get perfectly linear speedup, so that doubling the number of CPUs halves the running time, but this doesn’t happen in reality, mainly because of the communication and synchronisation overhead. There is also a theoretical limit to the speedup, given by Amdahl’s Law and explained in detail by Jenkov[8].
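As a small worked example of the speedup measure and of Amdahl's Law, the sketch below uses the usual statement of the law: if a fraction p of a program can be parallelized and n CPUs are available, the speedup is at most 1 / ((1 - p) + p / n). The function names, the symbols p and n, and the timings in the example are assumptions made for illustration.

```python
def speedup(serial_time: float, parallel_time: float) -> float:
    """Measured speedup: time on one CPU divided by time on several CPUs."""
    return serial_time / parallel_time


def amdahl_speedup(parallel_fraction: float, num_cpus: int) -> float:
    """Upper bound on speedup when only part of the program can run in parallel."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / num_cpus)


if __name__ == "__main__":
    # Hypothetical timings: 120 s on one CPU, 40 s on several.
    print(speedup(120.0, 40.0))  # prints 3.0

    # With 90% of the work parallelizable, adding CPUs helps less and less.
    for n in (2, 8, 64, 1024):
        print(n, round(amdahl_speedup(0.9, n), 2))
```

With 90% of the work parallelizable, the bound approaches but never reaches 1 / (1 - 0.9) = 10, however many CPUs are added, because the remaining 10% still runs serially.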

References

[1] Moore, G. E.: Cramming More Components onto Integrated Circuits. Electronics Magazine, 1965.

[2] Sneed, A.: Moore's Law Keeps Going, Defying Expectations. Scientific American, 2015.

[3] Waldrop, M. M.: The Chips Are Down for Moore’s Law. Nature, 2016.

[4] The OpenMP API. www.openmp.org, 2016.

[5] Singh, C.: Multithreading in Java with Examples. Beginners Book, www.beginnersbook.com

[6] Friesen, J.: Understanding Java threads, Part 2: Thread synchronization. Java World, 2002.

[7] Parallel Computing. Wolfram MathWorld, http://mathworld.wolfram.com/ParallelComputing.html

[8] Jenkov, J.: Amdahl’s Law. http://tutorials.jenkov.com/java-concurrency/amdahlslaw.html

To fulfill our tutoring mission of online education, our college homework help and online tutoring centers are standing by 24/7, ready to assist college students who need homework help with all aspects of parallel computing. Our computer science tutors can help with all your projects, large or small, and we challenge you to find better online parallel computing tutoring anywhere.

College Parallel Computing Homework Help

Since we have tutors in all Parallel Computing related topics, we can provide a range of different services. Our online Parallel Computing tutors will:

- Provide specific insight for homework assignments.

- Review broad conceptual ideas and chapters.

- Simplify complex topics into digestible pieces of information.

- Answer any Parallel Computing related questions.

- Tailor instruction to fit your style of learning.

With these capabilities, our college Parallel Computing tutors will give you the tools you need to gain a comprehensive knowledge of Parallel Computing you can use in future courses.

24HourAnswers Online Parallel Computing Tutors

Our tutors are just as dedicated to your success in class as you are, so they are available around the clock to assist you with questions, homework, exam preparation and any Parallel Computing related assignments you need extra help completing.

In addition to gaining access to highly qualified tutors, you'll also strengthen your confidence level in the classroom when you work with us. This newfound confidence will allow you to apply your Parallel Computing knowledge in future courses and keep your education progressing smoothly.

Because our college Parallel Computing tutors are fully remote, seeking their help is easy. Rather than spend valuable time trying to find a local Parallel Computing tutor you can trust, just call on our tutors whenever you need them without any conflicting schedules getting in the way.